Edge AI for Voice and Audio: Why the Future is On-Device

And how Switchboard helps you build it


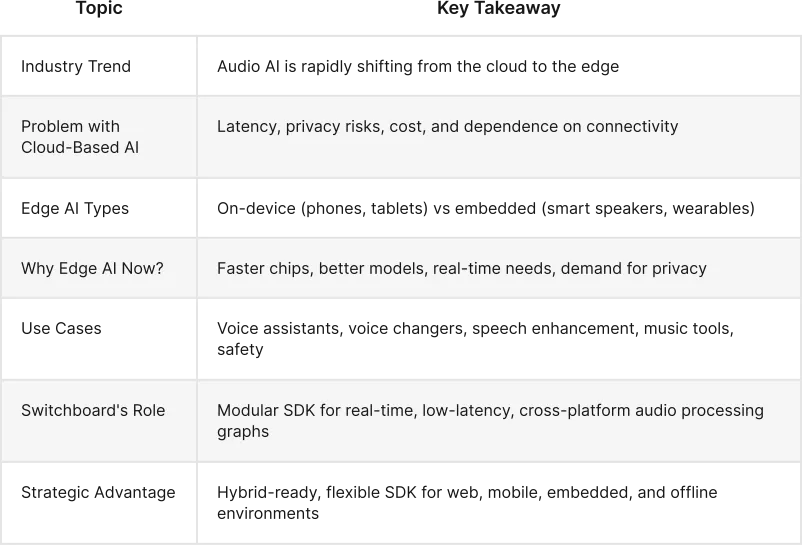

Why the Cloud Can’t Keep Up with Audio AI

Cloud-based audio AI brought us speech recognition, conversational AI agents, stem separation, voice changers, and real-time translation. But as usage has grown beyond proofs of concept, the weaknesses of a cloud-only approach have become glaring:

Latency: Cloud round trips add up, especially in complex multi-stage pipelines, and quickly become unacceptable in latency-sensitive real-time use cases such as gaming, conferencing, and safety-critical apps.

Privacy: Sending voice data off-device creates security and regulatory headaches, and often becomes a blocker in enterprise use cases.

Cost: Streaming high-resolution audio to the cloud is expensive, power-hungry, and wasteful.

Connectivity Dependency: Even momentary internet loss breaks the experience.

Edge AI, meaning models that run locally on the user’s device, is rapidly becoming a critical part of the solution for real-time audio interactions.
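To make the cost point concrete: uncompressed PCM audio has a bitrate of sample rate × bit depth × channels. The short Python sketch below does that arithmetic for two common formats. It deliberately ignores codec compression and network overhead, so treat the results as a baseline for raw payload rather than a measured streaming cost.

```python
def pcm_bitrate_bps(sample_rate_hz: int, bit_depth: int, channels: int) -> int:
    """Bits per second for uncompressed linear PCM audio."""
    return sample_rate_hz * bit_depth * channels


def megabytes_per_hour(bitrate_bps: int) -> float:
    """Convert a bitrate into megabytes (10**6 bytes) transferred per hour."""
    return bitrate_bps * 3600 / 8 / 1_000_000


formats = {
    "16 kHz / 16-bit mono": (16_000, 16, 1),    # common input format for speech models
    "48 kHz / 16-bit stereo": (48_000, 16, 2),  # common format for music
}

for label, (rate, depth, ch) in formats.items():
    bps = pcm_bitrate_bps(rate, depth, ch)
    print(f"{label}: {bps / 1000:.0f} kbps, {megabytes_per_hour(bps):.1f} MB/hour")
# 16 kHz / 16-bit mono: 256 kbps, 115.2 MB/hour
# 48 kHz / 16-bit stereo: 1536 kbps, 691.2 MB/hour
```

Multiply by the number of concurrent users and hours of use to see why continuous cloud streaming gets expensive at scale.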

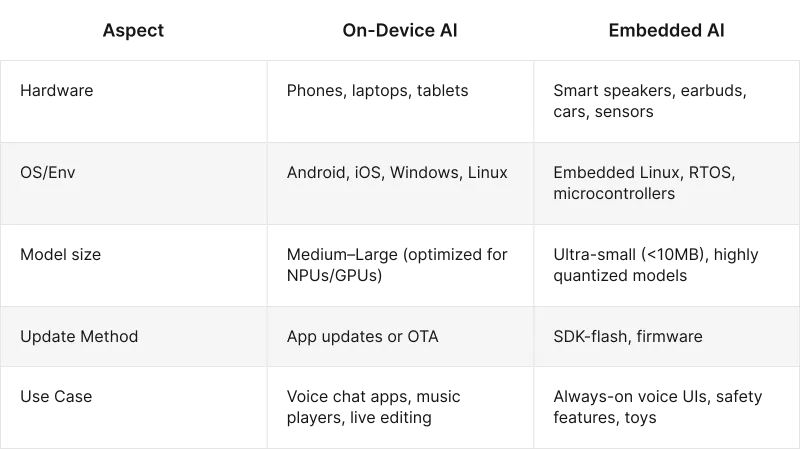

On-Device vs Embedded

One way to define “Edge AI” is by the hardware and operating system it runs on. Most use cases fall into one of the two categories shown above: on-device and embedded. The distinction matters: designing for on-device AI means targeting user-facing applications, while embedded AI suits low-power, passive, real-time environments such as wearables, soundbars, and IoT devices.

What’s Driving the Shift to Edge Audio?

NPUs in Consumer Hardware: Apple’s Neural Engine, Qualcomm’s Hexagon NPU, and the TPU in Google’s Tensor chips put serious inference power on the device itself.

Smaller, Faster Models: Compact models such as the smaller Whisper variants, DistilHuBERT, and real-time voice conversion models can now run with under 100 ms of latency on mobile hardware.

Developer Stack Maturity: Tools like ONNX Runtime, OpenVINO, and Core ML make cross-platform deployment more feasible than ever.

Edge SDKs: Platforms like Switchboard lower the barrier for building audio products with an edge-first runtime.

Real-World Use Cases for Edge Audio AI

Here are examples from both Switchboard’s customer base and the broader market:

Conversational Voice AI

Intelligent assistants that still work offline (hybrid)

Instant responses from small on-device models that can coordinate with other models

Hands-free, voice-controlled applications

Gaming & Social

Real-time voice changers (e.g., child-safe filters or roleplay)

Local noise suppression and detection of specific speakers

Offline, AI-driven voice interaction with NPCs (agent-based speech-to-speech)

Music & Creator Tools

On-device stem separation (practice mode, karaoke)

Smart EQ and compressor graphs

Jam session sync over peer-to-peer networks

Communication & Conferencing

Local echo cancellation, gain control, and dynamic speech enhancement

Private transcription (e.g., confidential call logs)

Barge-in detection and AI agent switching

Automotive & Smart Devices

Voice command parsing without cloud connection

In-vehicle safety alerts based on sound context

Selective noise cancellation on embedded speakers
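Several of these use cases, barge-in detection in particular, come down to reacting to the user's voice within a few frames. The sketch below is a deliberately simple, hypothetical energy-threshold detector in Python, not Switchboard's VAD: it flags a barge-in when the microphone level stays above a threshold for a few consecutive frames while the agent is speaking. The threshold, frame count, and `BargeInDetector` name are illustrative assumptions, and the code assumes echo cancellation has already removed the agent's own playback from the mic signal. Production systems typically use a trained VAD model such as Silero rather than raw energy.

```python
import math
from dataclasses import dataclass, field
from typing import Sequence


def frame_rms(frame: Sequence[float]) -> float:
    """Root-mean-square level of one frame of float samples in [-1.0, 1.0]."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


@dataclass
class BargeInDetector:
    """Hypothetical energy-based barge-in detector (not a Switchboard API).

    Assumes the mic input is already echo-cancelled, so the agent's own
    TTS playback does not count as user speech.
    """
    threshold: float = 0.02       # RMS level treated as speech (illustrative)
    min_speech_frames: int = 3    # consecutive loud frames required to trigger
    agent_speaking: bool = False  # True while the agent's TTS is playing
    _run: int = field(default=0, init=False)  # current run of loud frames

    def process(self, mic_frame: Sequence[float]) -> bool:
        """Feed one mic frame; return True on the frame where barge-in fires."""
        if frame_rms(mic_frame) >= self.threshold:
            self._run += 1
        else:
            self._run = 0
        if self.agent_speaking and self._run >= self.min_speech_frames:
            self.agent_speaking = False  # caller should stop TTS and yield the turn
            self._run = 0
            return True
        return False


# 160 samples = 10 ms of audio at 16 kHz.
silence = [0.0] * 160
speech = [0.1, -0.1] * 80
detector = BargeInDetector(agent_speaking=True)
events = [detector.process(f) for f in [silence, speech, speech, speech, speech]]
print(events)  # [False, False, False, True, False]
```

Requiring several consecutive loud frames trades a few tens of milliseconds of reaction time for fewer false triggers from clicks and short noises.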

How Switchboard Makes Edge Audio AI Actually Buildable

While many SDKs focus on specific models (like transcription or stem separation), Switchboard provides a general-purpose audio graph engine and a modular SDK for building custom real-time audio pipelines:

Key Features

Graph-Based Audio Processing: Build pipelines by connecting processing nodes such as speech-to-text (STT), text-to-speech (TTS), filters, DSP effects, and media players.

Modular Nodes: Plug-and-play architecture lets you add speech-to-text, voice changers, or noise reduction as needed.

Low-Latency Real-Time: Designed for interactive use cases like games, agents, and comms.

Hybrid Cloud + On-Device: Supports seamless fallback to, or augmentation with, cloud inference.

Cross-Platform: Targets iOS, Android, WebAssembly, embedded Linux, and more.

Voice Activity Detection and Extensions: Built-in voice activity detection (VAD), plus extensions such as Whisper, Silero, and OpenVINO-accelerated models.
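To illustrate the idea behind graph-based processing with swappable nodes, here is a minimal, hypothetical Python sketch. It is not Switchboard's API: `Node`, `Gain`, `OnePoleLowPass`, and `Chain` are names invented for this example, and the "graph" is reduced to a linear chain in which each node processes a block of samples and passes it on.

```python
from typing import Dict, List

Block = List[float]  # one buffer of mono float samples


class Node:
    """Base class for a processing node: one block in, one block out."""
    def process(self, block: Block) -> Block:
        raise NotImplementedError


class Gain(Node):
    def __init__(self, gain: float) -> None:
        self.gain = gain

    def process(self, block: Block) -> Block:
        return [s * self.gain for s in block]


class OnePoleLowPass(Node):
    """One-pole low-pass filter: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    def __init__(self, alpha: float) -> None:
        self.alpha = alpha
        self._prev = 0.0  # filter state carries across blocks

    def process(self, block: Block) -> Block:
        out = []
        for x in block:
            self._prev += self.alpha * (x - self._prev)
            out.append(self._prev)
        return out


class Chain:
    """A linear graph: nodes run in insertion order, each feeding the next."""
    def __init__(self) -> None:
        self._nodes: Dict[str, Node] = {}

    def add(self, name: str, node: Node) -> "Chain":
        self._nodes[name] = node
        return self

    def replace(self, name: str, node: Node) -> None:
        # Reassigning an existing dict key keeps its original position.
        if name not in self._nodes:
            raise KeyError(name)
        self._nodes[name] = node

    def process(self, block: Block) -> Block:
        for node in self._nodes.values():
            block = node.process(block)
        return block


chain = Chain().add("filter", OnePoleLowPass(alpha=0.5)).add("gain", Gain(2.0))
print(chain.process([1.0, 1.0, 1.0]))  # [1.0, 1.5, 1.75]
chain.replace("gain", Gain(0.5))  # swap one node; the rest of the chain is untouched
```

Because each node only sees blocks in and blocks out, swapping an effect leaves the rest of the pipeline alone. A real audio graph engine would also handle branching, mixing, multichannel buffers, and scheduling on a real-time audio thread.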

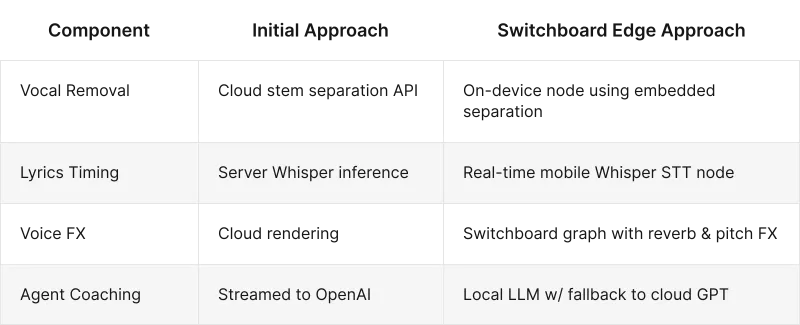

Example: From Cloud to Edge in One Graph

Say you're building a karaoke app. Here's how your pipeline might evolve:

With Switchboard, switching between these scenarios is just a matter of swapping nodes or flipping a config switch.
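As a rough illustration of what "flipping a config switch" can look like, the hypothetical Python sketch below routes a karaoke app's stem-separation step either to a cloud backend or to an on-device one, falling back to on-device processing when the network call fails. Both separators are placeholders (the local one passes audio through unchanged; the cloud one always raises to simulate a dropped connection), and none of the names come from the Switchboard SDK.

```python
from dataclasses import dataclass
from typing import Callable, List

Block = List[float]
Separator = Callable[[Block], Block]  # returns the backing track for one block


def on_device_separator(block: Block) -> Block:
    # Placeholder for a local stem-separation model: passes audio through.
    return list(block)


def cloud_separator(block: Block) -> Block:
    # Placeholder for a cloud request: always fails to simulate going offline.
    raise ConnectionError("network unavailable")


@dataclass
class SeparationConfig:
    prefer_cloud: bool = False   # the "config switch"
    allow_fallback: bool = True  # fall back to on-device if the cloud call fails


def separate(block: Block, config: SeparationConfig,
             local: Separator = on_device_separator,
             remote: Separator = cloud_separator) -> Block:
    """Run stem separation on the configured backend, with on-device fallback."""
    if not config.prefer_cloud:
        return local(block)
    try:
        return remote(block)
    except (ConnectionError, TimeoutError):
        if not config.allow_fallback:
            raise
        return local(block)


audio = [0.1, 0.2, 0.3]
print(separate(audio, SeparationConfig(prefer_cloud=True)))  # [0.1, 0.2, 0.3]
```

Keeping the backend choice in a config object rather than in the pipeline code means the same graph can ship cloud-first, edge-first, or edge-only without structural changes.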

The Strategic Opportunity: Real-Time, Private, Offline-Ready

Building for edge means:

Your product still works in a tunnel, on a plane, or off-grid.

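You control privacy: audio that never leaves the device cannot leak from the cloud, which removes a major source of GDPR and HIPAA risk.

Latency can stay under 100 ms instead of stretching to a second or more.

You reduce your dependence on platforms such as OpenAI, Google, or AWS.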
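A sub-100 ms target is easier to reason about as a budget. In a simplified model, each audio buffer adds frames ÷ sample rate of delay on capture and again on playback, and every processing stage adds its own compute time. The Python sketch below sums those terms; the per-stage times are hypothetical placeholders rather than benchmarks, and a real measurement would also include driver and hardware buffering, plus a network round trip for any cloud stage.

```python
from typing import Dict


def buffer_latency_ms(frames: int, sample_rate_hz: int) -> float:
    """Time to fill one audio buffer of `frames` samples, in milliseconds."""
    return 1000.0 * frames / sample_rate_hz


def total_latency_ms(frames: int, sample_rate_hz: int,
                     stage_ms: Dict[str, float]) -> float:
    """Simplified end-to-end estimate: one input buffer, one output buffer,
    plus the compute time of each processing stage."""
    io_ms = 2 * buffer_latency_ms(frames, sample_rate_hz)
    return io_ms + sum(stage_ms.values())


# Hypothetical per-stage compute times; measure your own pipeline on target hardware.
stages = {"vad": 1.0, "speech_to_text": 40.0, "voice_effect": 5.0}
total = total_latency_ms(frames=256, sample_rate_hz=48_000, stage_ms=stages)
print(f"{total:.1f} ms, within 100 ms budget: {total < 100}")
# 56.7 ms, within 100 ms budget: True
```

Smaller buffers shrink the I/O term but raise CPU overhead and the risk of dropouts, so buffer size is usually tuned per device.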

This is especially powerful for:

Regulated industries (health, finance, defense)

Consumer electronics (audio devices, wearables)

Multiplayer and UGC platforms (gaming, social, virtual worlds)

AI Will Be Heard

Audio is the next frontier for Edge AI. Vision is bandwidth-heavy, yet many vision use cases (like photo tagging) can tolerate latency. Voice is temporal and interactive, which makes real-time response non-negotiable.

Switchboard is designed to make this transition not just possible but easy, composable, and future-proof.

If you're building apps where audio is not just an output but a control surface, the edge is where your intelligence needs to live. And Switchboard gives you the tools to live there.